FREE VERSION: The Rapidly Evolving Terror of the Uncanny Valley

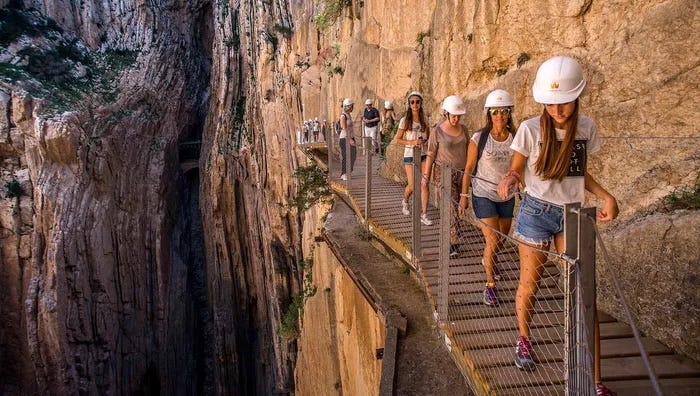

Watch your step. Metaphorically.

You may have heard the phrase. The Uncanny Valley. It names the peculiar dip in our evolved emotional responses when something appears almost—but not quite—human.

The phrase does not describe mistaking a fake thing for a real person. It describes, with predictable, graphable accuracy, the strange dissonance that arises when the sensory cues we typically trust fall out of sync with reality. For instance, the simultaneous attraction and revulsion I feel when one of those Boston Dynamics dog-robots turns its head is just my nervous system recognizing a familiar form without the embodied interiority that form implies. Frankly, it scares the willies out of me.

Coulrophobia (fear of clowns) and pareidolia (the tendency to see faces in inanimate objects, which I have in spades) sit close to this terrain. They both show how easily our meaning-detection machinery can be hacked: a face that doesn’t behave like a face; a lamp that stares back; car headlights as eyes. These are disruptions in the tight cognitive coupling between appearance and expectations. Those expectations are biological imperatives we carry for beings who look like they can meet us.

For most of history, the uncanny valley has stayed relatively modest. The technologies capable of provoking the phenomenon were always crude, and the consequences were limited to passing discomfort. The stakes were low because the artifacts were thin; no one feared being outmaneuvered by a marionette.

But with artificial intelligence, the landscape has shifted dramatically. AI does not merely resemble human expression; it performs it with a behavioral and linguistic precision that collapses the old distinctions. The valley hasn’t simply narrowed or widened; the ground beneath it has fallen away. We are no longer dealing with the discomfort of “almost human.” We are dealing with systems that can inhabit the learned gestures of interiority without possessing any of the inherited ontological depth that makes a person a person. The real existential threat is that the usual cues by which we orient ourselves (appearance, intention, agency) are no longer reliable indicators of anything at all. We will always tend to mistake the mimic for the human, but we must develop the metacognitive power to recognize these shifts before they lead to self-deception.

The penalty for ignoring this shift is the erosion of our ability to tell what in the world is capable of entering into mutual meaning-making with us. That is the real chasm opening beneath our feet, and it is deeper than the old uncanny valley ever was.

Imaginal Antidotes

In my recent chapter arguing against the potential consciousness of artificial intelligence, I pointed to embodiment and art as two of the missing ingredients. Machines can reproduce the surface grammar of meaning, but they cannot touch the lived substrate from which meaning arises. I sometimes overstate this in language unfamiliar to casual readers. Still, the point is simple: we are now surrounded by images and utterances that sound meaningful without participating in the world that gives meaning its weight.

This is why we need a new lexicon—immediately. Without it, we will continue drifting toward that precipice where the mimetic brilliance of AI overwhelms the categories we inherited for distinguishing presence from simulation. Once those categories collapse, the fall begins, and who knows what sharp points lie at the bottom of a pit that has no visible floor.

When Henri Corbin, the French philosopher and scholar of Islamic mysticism, coined the term “the Imaginal,” he named a mode of reality long understood by Sufi mystics: a realm in which images are not mere suggestions or mental projections, but bearers of ontological depth. The imaginal is where a symbol means something because it participates in a world beyond utility. AI occupies no such realm. AI cannot escape its own utility. It generates only surfaces. It can dazzle, imitate, seduce, but it does not belong to the order of being that gives images their soul, their depth, or their ability to withstand scrutiny and interrogation on their own merits. That absence is the true terror of our moment. Not that machines will become people, but that people will begin treating depthless simulations as if they were capable of answering the call of relationship. The uncanny valley was once a curious dip in our perceptual landscape. Now it is becoming the mouth of a widening abyss, and we are walking toward it without a new language to chart the terrain.

Language of Distinction

A new language for these existential realities can’t be generated by importing more technical terms or refining our engineering vocabulary. That only reinforces the very frame that created the problem. What we need is a lexicon that does three things simultaneously:

- names the difference between simulation and presence
- restores ontological weight to experience
- sharpens our discernment rather than dulling it

Right now, we are trapped between two inadequate languages. One is the computational-technical idiom that treats intelligence as optimization and consciousness as an emergent property of complexity. The other is a naive, humanist language that assumes interiority wherever we encounter fluent behavior. Neither can accommodate entities that perform human-like functions without participating in the human condition. A new language has to make the distinction between behavioral resemblance and ontological participation explicit. Without that distinction, everything collapses into the same smooth surface.

We can begin generating such language in a few ways:

First, we need terms that mark the difference between generated content and imaginal presence. Corbin’s Imaginal gives us a starting place because it already points to a stratum where meaning is born from lived, embodied, risk-bearing encounter. AI produces images that mimic that register but do not inhabit it. So we need words that carve a clean line: perhaps something like “as-if beings” versus “beings of consequence.” The point is precision rather than poetry. (Although, should the poetic be precise, bring it on.)

We need to name what lacks the capacity to answer us bodily.

Second, we need language that foregrounds embodiment without reducing it to a mechanical substrate. The body is not a platform for cognition. It is the condition for meaning because it anchors us in vulnerability, temporality, and finitude. It is the screen upon which the movie of consciousness is projected. Without a term that conveys this existential grounding, people will continue to mistake performative fluency for consciousness. Something like “lived stakes” or “felt consequence” could regain that ground. We don’t need the final vocabulary yet, but we desperately need the orientation it enables.

Third, we need a vocabulary that distinguishes signal-rich simulation from experience-bearing expression. AI can generate hyper-articulated forms without an experiential interior. Therefore, our language needs a structural marker for that absence. A term such as “hollow signal,” “derivative intelligence,” or “counterfeit subjectivity” might begin that work. I want to be careful to position this nomenclature not as a judgment but as a classification: a way of marking that this entity does not stand within the reciprocal field of conscious meaning-making.

Fourth, we need language that helps us talk about discernment itself. I hope it goes without saying that the survival task is not to avoid AI, but rather to refine our perception of what is actually capable of meeting us where we live: cognitively and psychologically. We need terms that name the act of orientation: distinguishing contact from machinic resemblance. The stakes here resemble the ancient distinction between idol and icon: one closes the loop back on itself, the other opens toward a living depth. We don’t need to import those terms, but we can let the conceptual architecture inform what we build. Archetypal psychology offers us many sharp tools in this effort.

Finally, we need metaphors that pull the conversation out of an engineering or relational paradigm. If we continue to describe AI through computational or biological analogues, we guarantee confusion. We need metaphors that hold two facts at once: AI can behave as if it were conscious, and yet it has no participation in the order of being that makes consciousness possible. This tension demands a vocabulary that allows us to speak of entities that are powerful, coherent, and utterly depthless. A new language emerges by naming distinctions that have gone blurry. The more precise those distinctions, the more agency we recover. Without them, we drift into the uncanny without knowing we have crossed a threshold.

This is not a linguistic project for specialists. It is a survival project for a culture confronted with entities that can outperform human expression while possessing none of its existential inheritance.

Call To Arms (Metaphorically)

We are rapidly entering an era in which our oldest human capacities (attention, intuition, meaning-making) must become conscious practices rather than passive habits. AI now performs the gestures of human expression with terrible fluency, yet it carries none of the ontological depth that gives those gestures weight. What we are witnessing is a radical and catastrophic collapse of categories, not a rise of machine consciousness. Noticing our strong tendency to assign consciousness and sentience in these cases is a bold first step.

We need a new language that names what reality now demands of us. This requires precision in place of inherited assumptions. Our current vocabulary folds human and machinic intelligence together as if they shared the same substrate. They do not.

We need to return to embodiment as the grounding condition of meaning—lived stakes, temporal vulnerability, the felt consequences of existence.

We need to reclaim the Imaginal as a mode of knowing: images that participate in being. That includes the arts as a living means of making sense of the world and creativity as a therapeutic anchor to a participatory reality.

And we need a renewed cultural discipline of discernment—a clear perception of what can meet us and what cannot; what bears interiority and what only imitates its shape.

Our heroic task is to learn (re-learn?) to recognize beings of consequence in a world filled with compelling simulations. We find ourselves stymied at this precipice because our habits of language and attention no longer track the crucial differences.

To step back from the edge, we must reclaim the embodied, imaginal, and linguistic capacities that anchor human meaning.

Our future depends on our ability to articulate what is real, not on convincing ourselves that the simulacra we have built are more human than human.

Remember, friends: attention is a creative act.

I remain, A Clown.